Key figures in the artificial intelligence (AI) industry have signed an open letter urging a pause on the development of powerful AI systems, citing the profound risks they pose to humanity.

Twitter CEO Elon Musk, Apple co-founder Steve Wozniak, and Pinterest co-founder Evan Sharp are among those who want the training and development of AI systems more powerful than GPT-4 to be halted for at least six months.

According to the open letter, AI systems are becoming increasingly human-competitive at general tasks, and nonhuman minds could eventually outnumber and outsmart humans, putting our civilisation at risk.

To address these risks, the signatories called on AI labs to pause the training of these systems and to work with independent experts to develop and implement shared safety protocols for advanced AI design and development. These protocols should be rigorously audited and overseen by independent outside experts.

If such a pause cannot be enacted quickly, the letter says governments should step in and institute a moratorium. In parallel, it urged AI developers to work with policymakers to “dramatically accelerate development of robust AI governance systems.”

These should include new and capable regulatory authorities to oversee and track highly capable AI systems, a robust auditing and certification ecosystem, funding for AI safety research, and well-resourced institutions for coping with the economic and political disruptions that AI will cause.

The open letter emphasises that it is not calling for a pause on AI development in general, but rather for a step back from the dangerous race towards ever-larger, unpredictable black-box models with emergent capabilities.

AI research and development should instead focus on making today’s powerful, state-of-the-art systems more accurate, safe, and transparent.

It is essential to have guidelines in place for AI development

Considering the almost boundless potential of AI, it’s no surprise that tech giants such as Meta and Microsoft are racing to build more capable AI models and gain a first-mover advantage in the space.

But it is important to note that these companies exist primarily to make money. A company that consistently prioritised social benefit over profit would most likely struggle to survive.

This is why it is essential to have regulations in place for AI development: companies are racing to release AI tools with their own interests in mind.

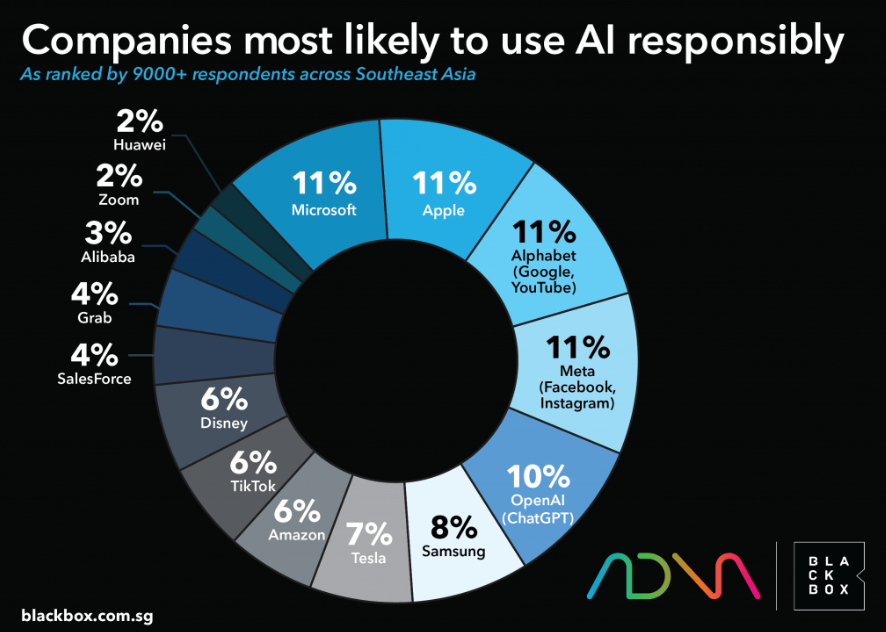

Currently, Southeast Asians, including Singaporeans, are ambivalent about the positive and negative aspects of AI, particularly with regard to how these tech giants are viewed, according to a Blackbox-ADNA survey.

Most respondents seem to think that Microsoft, Apple, Alphabet (the parent company of Google), Meta and OpenAI would develop and use AI responsibly.

At the same time, Meta topped the list of companies seen as least likely to use AI responsibly. This comes after Mark Zuckerberg’s announcement of the company’s pivot into AI.

That said, the AI industry is still very much in its infancy, which makes it much harder to regulate. Regulating it at a time when the industry is growing rapidly could also impede innovation.

In fact, a study from Stanford University found that attempts to regulate AI as a whole would be “misguided”, as there is still “no clear definition of AI”, and the risks and factors involved can vary greatly across different fields and industries.

The regulation of AI is a complex topic, but one thing is certain: AI will require regulation, sooner or later.

Featured Image Credit: The Siasat Daily