Disclaimer: Opinions expressed below belong solely to the author.

After a year in beta, Google’s Search Generative Experience, in which the company’s Gemini AI model provides direct answers or auto-generated summaries for selected search queries, is now being rolled out to users around the world as “AI Overviews”.

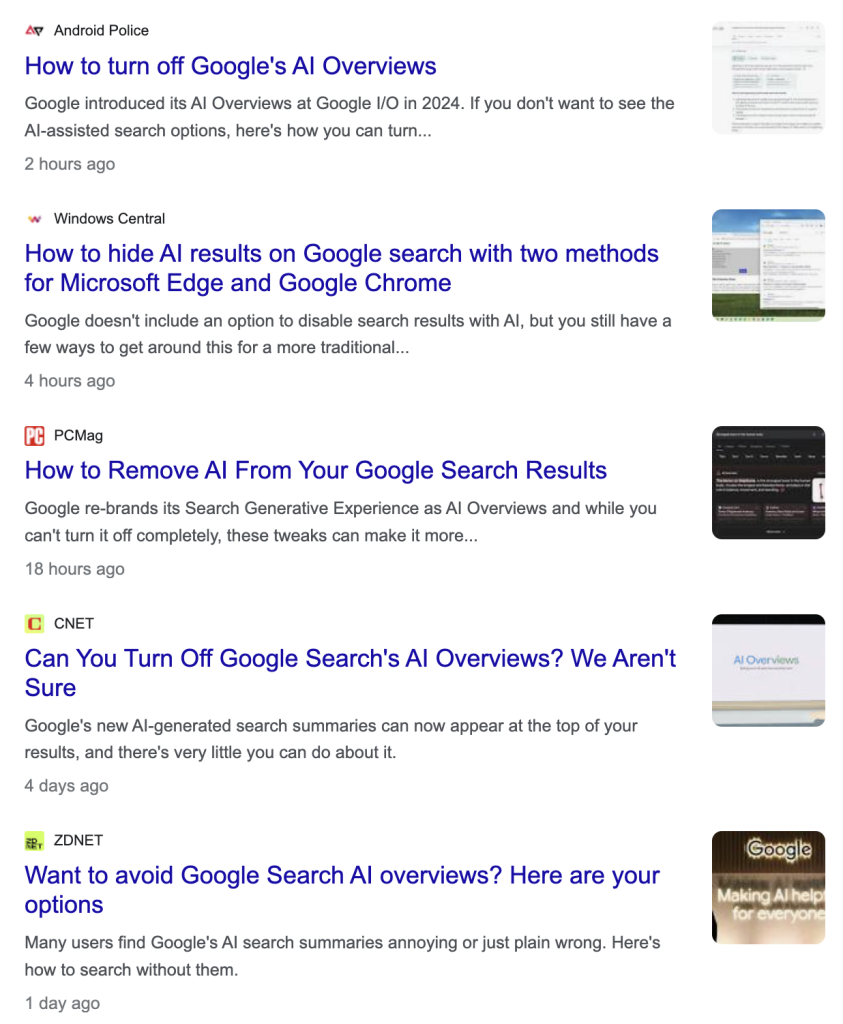

And the early feedback isn’t good, with media outlets rushing to advise frustrated users on how to get rid of a feature that is already annoying so many of them.

Under normal circumstances this could be considered a disaster. Imagine spending 12 months fine-tuning a feature only for most of your customers to reject it.

But what if that’s what was intended all along?

This may read like a bizarre conspiracy theory, but humour me for a minute and it may soon seem less outlandish than you think.

Cui bono?

When identifying crime suspects, ancient Romans started by asking a simple question: “cui bono?”, or “who benefits?”. I think that’s where we might start as well, by asking: “who would benefit from offering an inferior AI experience?”

Normally, a feature that fails to gain traction sets off alarms at the company. Apologies and fixes follow.

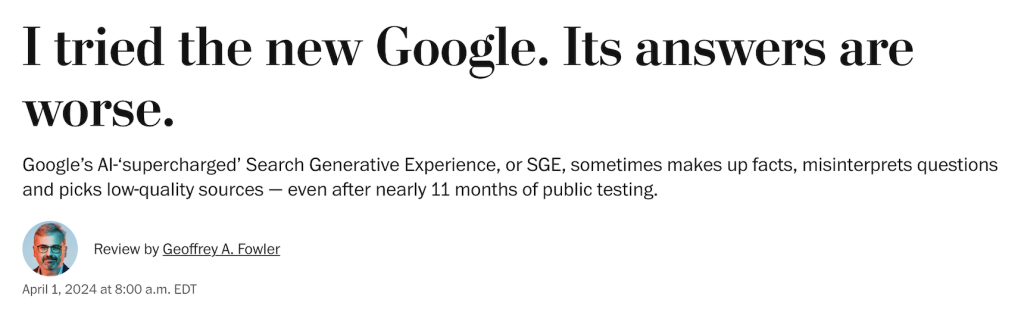

This was the case whenever ChatGPT was caught doing something weird: a patch was already on the way. But Google has spent a whole year working on a product that, by many accounts, remains half-baked.

Just last month, the internet was awash with criticism of the AI-generated search answers, and as recently as early May, Gemini’s advice on how to pass kidney stones was to drink two litres of urine.

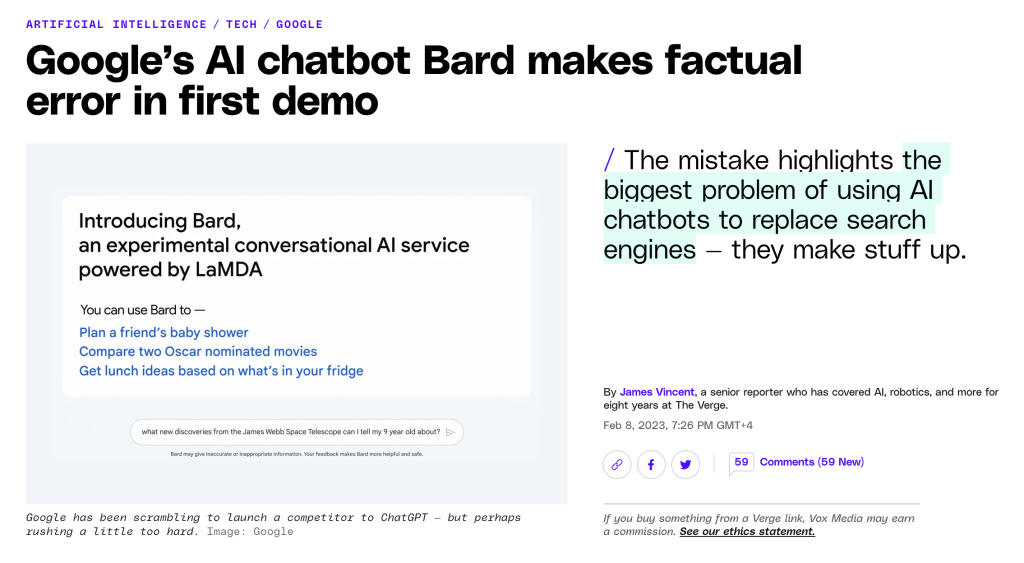

And this isn’t the first time either. Bard, the predecessor to Gemini, wrongly claimed that the James Webb Space Telescope was the first to capture a photo of an exoplanet, while just last week the video assistant powered by Gemini suggested fixing film stuck in a classic camera by opening its back, which would inevitably expose the film to light and ruin every shot taken on it so far.

Of course, Google’s chatbots aren’t unique in giving bad or bizarre advice. Its competitors, including the market leader OpenAI, have had their fair share of blunders. The difference, however, is that they typically addressed them promptly, shipping fixes and steadily improving their models over time.

Meanwhile, the search giant is stumbling even after spending a year reportedly fine-tuning its technology.

You just can’t escape the impression that Google is really dragging its feet on AI, rather than treating it as a priority investment.

And there are good reasons why it would.

Plugging a $175 billion hole

It seems that the company’s interest in artificial intelligence was dictated not by a desire to develop superior technology that could power amazing future products, but by the need to secure a stake in it and figure out how to monetise it before it obliterates the company’s main source of revenue: online search advertising.

Google ads displayed alongside search results account for 56% of the company’s annual haul of over $300 billion, bringing in $175 billion in revenue last year.

Google’s leadership must have understood that the first wave of AI solutions would come in the form of simple bots providing information, and that these could flip the entire web search model on its head. It’s a mortal threat to the company.

Instead of having to browse through dozens of links — with ads accompanying them — people would just get an instant, accurate reply from an intelligent system trained on all of humanity’s information.

Where do you place an ad in that?

Even for queries with commercial intent, the space for advertising would shrink dramatically, since users would no longer have to scroll through multiple pages of results, which offered ample room for dozens of companies to place their ads.

AI threatens to obliterate a tremendous amount of advertising real estate and to greatly shrink whatever space remains for ads next to AI Overviews.

At the same time, much like every other AI business, Google is struggling to find a way to make money from the new tech.

How do you plug a hole worth $175 billion with technology that is burning so much money that Sam Altman has to tour the Middle East monarchies trying to secure literal trillions for future development?

Yes, there’s some cash to be found in B2B solutions, but it’s not enough. OpenAI’s revenue ballooned from nothing to $2 billion last year, but the company is still bleeding money. Meanwhile, billions of consumers won’t pay even 20 bucks per month to use a tool like ChatGPT.

On top of that, as with every new technology, AI startups throw free features at people just to get them to sign up, leaving monetisation for later. All of a sudden, the tired old Google search could be squeezed out of the very market it relies on.

The company, however, is in a bind, because it stands to lose no matter how it responds.

Google’s lose-lose future

Despite having significantly more resources than OpenAI, Google was clearly quite conservative with its investment in previous years.

It wasn’t until ChatGPT took the world by storm, prompting Microsoft to drop $10 billion into OpenAI, that Google woke up and cobbled together a functioning product, Bard, out of bits and pieces of side projects.

Building a leading AI solution doesn’t seem to have been its motivation, because that would only accelerate change and inevitably disrupt its core business. Why cut the branch you’re sitting on?

It has always had the money, but it would gain very little from leading a race to, effectively, destroy itself.

On the other hand, it cannot, of course, ignore the challenge, because that would let somebody else dictate the rules of the AI-powered world. That isn’t acceptable either.

It cannot leap ahead, it cannot stay back. So, what could it do to protect itself?

Since it is impossible to stop technological progress, the next best thing would be to tarnish it a little, instilling doubt in people as to its reliability.

How to eat your cake and have it too

There are, of course, many areas in which artificial intelligence is both of benefit to the world and a non-issue for Google: image or video editing, for instance, crunching big datasets to extract valuable information, or identifying patterns in scientific, technological or security applications. The list is very long.

If only people could enjoy all of the above but still search the web manually — that would be the best compromise for Google.

But how do you fight a technology so vastly superior to clicking through dozens of spam links and not-always-relevant ads on a page that has hardly changed since 1998?

There may be a way: what if people didn’t quite trust the search done by AI?

As a near-monopoly, Google has the advantage of being able to shape what billions of us experience on the web every day, since search is often our gateway to it.

Releasing a half-baked new feature that frustrates millions of users into wanting to remove it ruins the all-important first impression people form of artificial intelligence. Not only does it not know everything, it can give you really bad advice, so it’s better to be safe and do your searching the “old way”.

Would anybody at Google complain if that was the outcome?

The company has no real competitors, and a few days ago Sam Altman dismissed the rumours that OpenAI would make a play for the search business in the near future. It’s likely to do so eventually, but by then people will find it hard to trust any AI-powered search engine.

If Google couldn’t make it then how can we trust anybody else?

Startups are forgiven more. When ChatGPT hallucinated, we reminded ourselves that it’s still a technology in development. But when an established giant like Google rolls out a feature after testing it for a year, it becomes a defining experience. In this case: a bad one.

I’m not suggesting here that Google is deliberately making Gemini provide incorrect answers; that would be near impossible, considering the millions of different queries people enter every day.

What I’m saying is that it wouldn’t even be necessary, since an imperfect application of AI is good enough to create that bad impression already.

It’s really hard to believe that Google genuinely failed to make AI Overviews reasonably reliable even after a year of tests. And if it did, then why is it trying to dump the feature on all of us, despite objections being raised left and right?

Nobody is forcing it to, after all, and bad user experience can kill any product. But maybe that’s the goal?