Just a few days ago, I wrote about an AI model from the National University of Singapore (NUS), built on Stable Diffusion, that translates brain activity into images and video.

The same principle can also be used to interpret brain signals as speech in patients who have lost the ability to talk because of brain damage.

This is what makes generative AI so enormously valuable: its ability to recognise patterns across hundreds, thousands or even millions of data points and translate them into the desired output.

It’s a lot like learning a language, only far faster and across far more than just verbal communication.

In one such case, a woman named Ann regained her ability to communicate through speech and facial expressions with the help of an AI avatar, driven by a brain implant and an AI model that translates her brain activity.

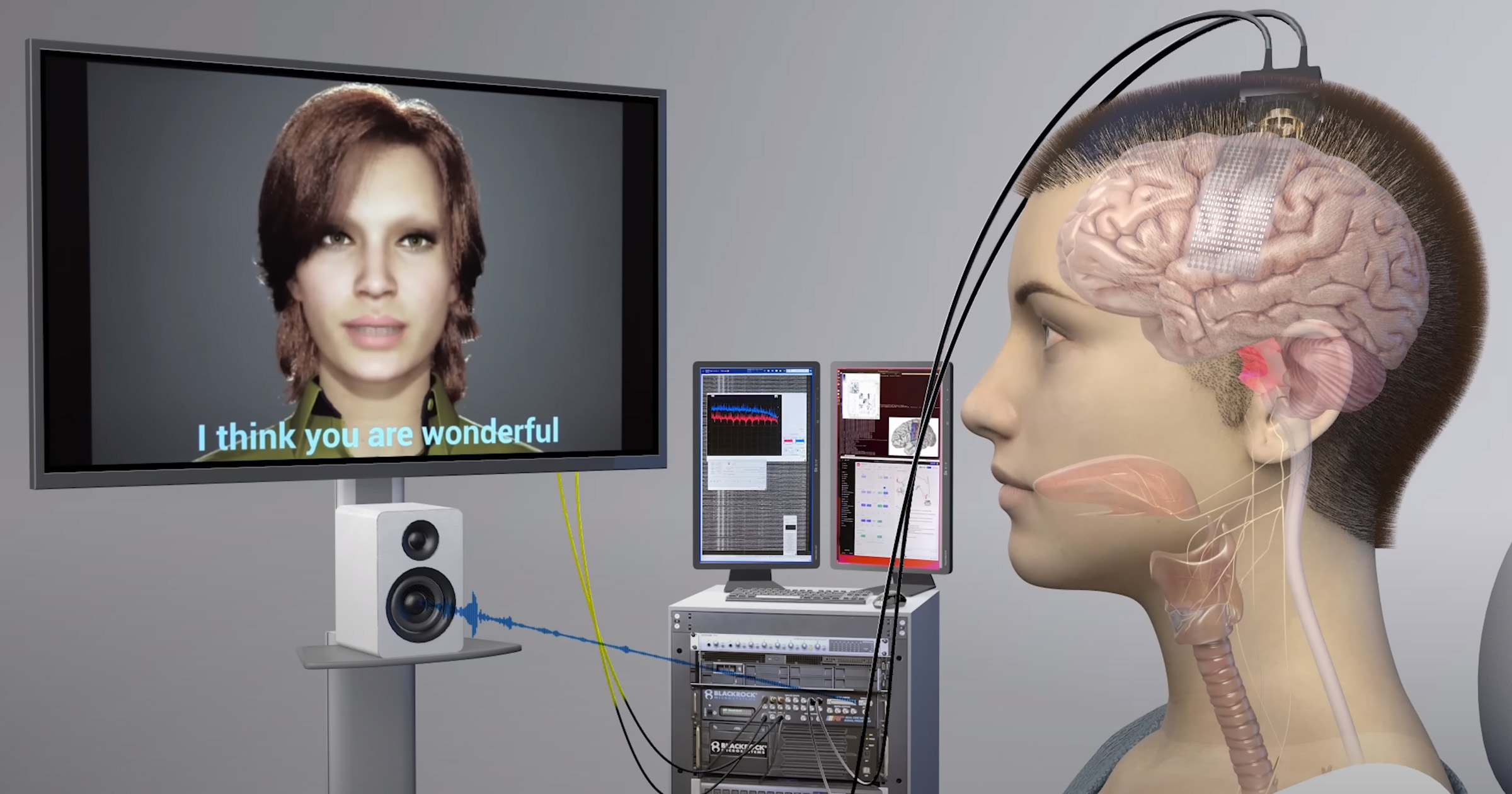

The system, developed by researchers at the University of California, San Francisco (UCSF) and the University of California, Berkeley, lets her speak at about 62 words per minute, roughly 40 per cent of the pace of normal speech, with a current error rate of 23.8 per cent.
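Speech decoders are usually scored by word error rate (WER): the number of word substitutions, deletions and insertions needed to turn the decoded sentence into the intended one, divided by the number of words in the intended sentence. Assuming the 23.8 per cent figure is a WER in this sense, the minimal Python sketch below shows how such a number is computed. The function name and the example sentences are illustrative, not taken from the study.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    # Levenshtein distance over words, computed one row at a time.
    # prev[j] = edits needed to turn the reference words processed so far
    # into the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # reference word deleted
                curr[j - 1] + 1,     # extra word inserted
                prev[j - 1] + cost,  # word substituted (or matched, cost 0)
            )
        prev = curr
    return prev[-1] / max(len(ref), 1)


intended = "i feel like i have a job again"
decoded = "i feel like i have the job"
# One substitution ("a" -> "the") plus one deletion ("again") over 8 words.
print(word_error_rate(intended, decoded))  # → 0.25
```

By this measure, 23.8 per cent means roughly one word-level error for every four words Ann intended to say. The same figures also imply a normal speaking pace of about 62 / 0.40 ≈ 155 words per minute.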

Ann, a Canadian math teacher, suffered a brainstem stroke in 2005 at just 30 years of age. She had been married for barely two years and had a 13-month-old daughter and an eight-year-old stepson.

“Overnight, everything was taken from me.”

After several years of therapy, she regained some control of the muscles in her face, enough to laugh or cry, and learned to type slowly on a screen using minute movements of her head.

“Locked-in syndrome, or LIS, is just like it sounds,” she wrote. “You’re fully cognizant, you have full sensation, all five senses work, but you are locked inside a body where no muscles work. I learned to breathe on my own again, I now have full neck movement, my laugh returned, I can cry and read and over the years my smile has returned, and I am able to wink and say a few words.”

A brain implant that interprets her brain activity on the fly and synthesises speech through a digital avatar, conveying not just words but also facial expressions and body movements, would be a massive improvement in her ability to communicate with people and to use devices such as smartphones or computers independently.

“When I was at the rehab hospital, the speech therapist didn’t know what to do with me,” said Ann. “Being a part of this study has given me a sense of purpose. I feel like I am contributing to society. It feels like I have a job again. It’s amazing I have lived this long; this study has allowed me to really live while I’m still alive!”

Translating electricity into words and movements

The decoding process required Ann to receive a paper-thin implant consisting of 253 electrodes, placed on the surface of her brain over an area the team had identified as critical for speech.

Unlike the NUS experiment, in which people were asked to visualise certain things that the AI then interpreted as images and video, this system requires the person to actually attempt to speak as they normally would.

It’s not about imagining words in your head but about sending signals to the muscles used for speech, even if they no longer respond due to injury. The electrodes pick up this activity and pass it to an AI model that was previously trained on that person’s brain signals.

Such training works the way most machine learning does: the input is repeated many times until the algorithm begins to recognise patterns and associate them with the desired output.
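The idea of learning through repetition can be illustrated with a deliberately simple stand-in. The Python sketch below is not the study's model: it uses synthetic data and swaps in a nearest-centroid classifier, which averages many noisy "attempts" at each sound into a template and then labels a new attempt by whichever template it most resembles. The 253-channel size mirrors the implant described in this article; the phoneme labels, noise level and repetition counts are hypothetical.

```python
import math
import random

N_CHANNELS = 253  # mirrors the implant's electrode count; everything else is synthetic


def fake_trial(pattern, noise, rng):
    """Simulate one speaking attempt: the underlying pattern plus Gaussian noise."""
    return [x + rng.gauss(0.0, noise) for x in pattern]


def train_centroids(trials):
    """Average all repetitions of each label into a single template vector."""
    sums, counts = {}, {}
    for label, features in trials:
        acc = sums.setdefault(label, [0.0] * len(features))
        for k, v in enumerate(features):
            acc[k] += v
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def classify(centroids, features):
    """Return the label whose template is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


rng = random.Random(0)
labels = ["AA", "B", "K"]  # hypothetical phoneme labels (ARPAbet-style)
true_patterns = {lab: [rng.gauss(0.0, 1.0) for _ in range(N_CHANNELS)] for lab in labels}

# "Repetition": 20 noisy attempts per phoneme form the training set.
training = [(lab, fake_trial(true_patterns[lab], 1.0, rng)) for lab in labels for _ in range(20)]
centroids = train_centroids(training)

# Fresh attempts the model has never seen.
test_set = [(lab, fake_trial(true_patterns[lab], 1.0, rng)) for lab in labels for _ in range(10)]
accuracy = sum(classify(centroids, f) == lab for lab, f in test_set) / len(test_set)
print(f"accuracy on fresh attempts: {accuracy:.2f}")
```

The more repetitions go into each template, the more of the trial-to-trial noise is averaged out, which is the intuition behind training a decoder on many attempts at the same material.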

In Ann’s case, training drew on phrases from a 1,024-word conversational vocabulary, which were broken down into 39 phonemes, the basic sounds from which all English words are built.

According to the researchers, focusing on phonemes rather than whole words (a small set of sounds is far easier to learn through repetition than thousands of dictionary words) improved accuracy and made the process three times faster.
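The article does not detail how decoded phonemes are turned back into words, so here is one minimal way to do it, assuming a pronunciation lexicon that spells each word as a sequence of phonemes (practical speech decoders typically also rank candidate sentences with a language model). The lexicon and its ARPAbet-style spellings are hypothetical; the point is that a decoder which only distinguishes a small, fixed set of sounds can still produce any word the lexicon contains.

```python
# Hypothetical mini-lexicon: word -> ARPAbet-style phoneme spelling.
LEXICON = {
    "i": ("AY",),
    "feel": ("F", "IY", "L"),
    "like": ("L", "AY", "K"),
    "a": ("AH",),
    "job": ("JH", "AA", "B"),
    "again": ("AH", "G", "EH", "N"),
}


def to_phonemes(words):
    """Flatten a phrase into the phoneme stream a decoder would be trained to emit."""
    return [p for w in words for p in LEXICON[w]]


def segment(phonemes):
    """Split a phoneme stream back into lexicon words using dynamic programming.

    best[i] holds one word sequence whose spellings exactly cover phonemes[:i],
    or None if no such sequence exists. Returns best[len(phonemes)].
    """
    n = len(phonemes)
    best = [None] * (n + 1)
    best[0] = []
    for end in range(1, n + 1):
        for word, spelling in LEXICON.items():
            start = end - len(spelling)
            if start >= 0 and best[start] is not None and tuple(phonemes[start:end]) == spelling:
                best[end] = best[start] + [word]
                break
    return best[n]


phrase = ["i", "feel", "like", "a", "job", "again"]
stream = to_phonemes(phrase)
print(stream)
print(segment(stream))
```

Adding a new word only requires adding its phoneme spelling to the lexicon; the decoder never has to learn a new output class, which is one way to see why a phoneme-based approach scales better than recognising whole words.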

“My brain feels funny when it hears my synthesised voice,” Ann wrote in answer to a question. “It’s like hearing an old friend.”

To add some body language to the interaction, the team used another AI-powered tool, developed by Speech Graphics, a company otherwise known for its work on character animation in video games such as The Last of Us Part II, Hogwarts Legacy, High On Life and The Callisto Protocol. The researchers then synchronised the signals received from Ann’s brain with the corresponding facial movements and expressions to produce a live 3D avatar that moves and speaks along with her.
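How Speech Graphics' software drives the avatar's face is not described here. A common technique in facial animation is to map each phoneme to a viseme, the mouth shape that produces it, and schedule those shapes as keyframes. The Python sketch below illustrates that idea under stated assumptions: the phoneme-to-viseme table, the viseme names and the (phoneme, onset time) input format are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical phoneme-to-viseme table (ARPAbet-style symbols -> mouth shapes).
VISEME_FOR = {
    "AA": "open", "AH": "open", "AY": "open",
    "IY": "wide", "EH": "wide",
    "F": "lip-teeth", "V": "lip-teeth",
    "B": "closed", "P": "closed", "M": "closed",
    "L": "tongue-up", "N": "tongue-up",
    "K": "back", "G": "back",
    "JH": "rounded",
}


@dataclass
class Keyframe:
    time_s: float  # when the avatar should reach this mouth shape
    viseme: str


def schedule(decoded):
    """Turn (phoneme, onset_time) pairs into avatar keyframes.

    Unknown phonemes fall back to a neutral face, and consecutive phonemes
    that share a mouth shape are merged into a single keyframe.
    """
    frames = []
    for phoneme, onset in decoded:
        viseme = VISEME_FOR.get(phoneme, "neutral")
        if not frames or frames[-1].viseme != viseme:
            frames.append(Keyframe(onset, viseme))
    return frames


# Hypothetical decoder output for the word "job".
for frame in schedule([("JH", 0.00), ("AA", 0.08), ("B", 0.21)]):
    print(f"{frame.time_s:.2f}s -> {frame.viseme}")
```

Merging repeated shapes keeps the animation from twitching between identical poses; a production system would also blend between keyframes and layer in expressions such as smiling.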

“My daughter was one when I had my injury, it’s like she doesn’t know Ann … She has no idea what Ann sounds like.”

Bringing people back to life

Because so much of our lives has become digital, giving people who have lost the ability to communicate a seamless, accurate solution that connects directly to their brains and doesn’t require muscle movement could, in a very real sense, bring them back to life, given how much they can do with a computer and internet access.

One day, they could barely type, relying on highly sensitive manual tools or aids that detect minuscule movements to slowly spell out their messages (as the late Stephen Hawking did). The next, they could be holding accurate, real-time conversations.

“Giving people like Ann the ability to freely control their own computers and phones with this technology would have profound effects on their independence and social interactions,” said co-first author David Moses, PhD, an adjunct professor in neurological surgery.

Like every innovation, it still needs polishing before it can become a widespread solution for people with disabilities caused by brain injury or disease. That said, the leap is already so large that the technology is genuinely useful even at this stage.

This is not some nebulous promise that may take a decade to see even limited use; the technology could gain global traction within just a few years. For thousands, if not millions, of patients around the world, it could be a real life-changer.

Featured Image Credit: UC San Francisco via YouTube