Disclaimer: Any opinions expressed below belong solely to the author.

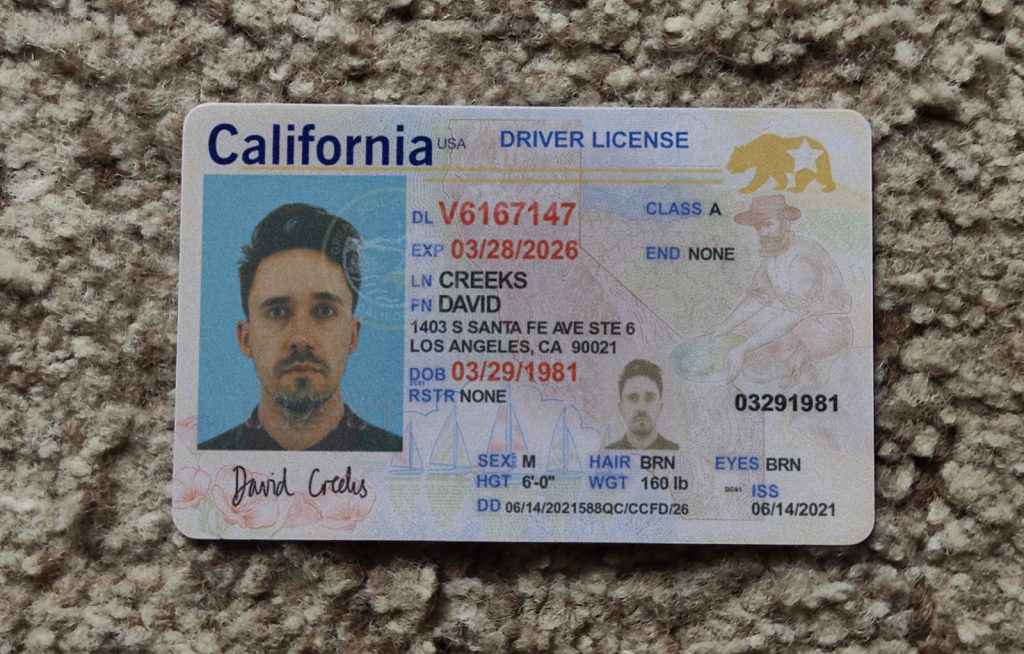

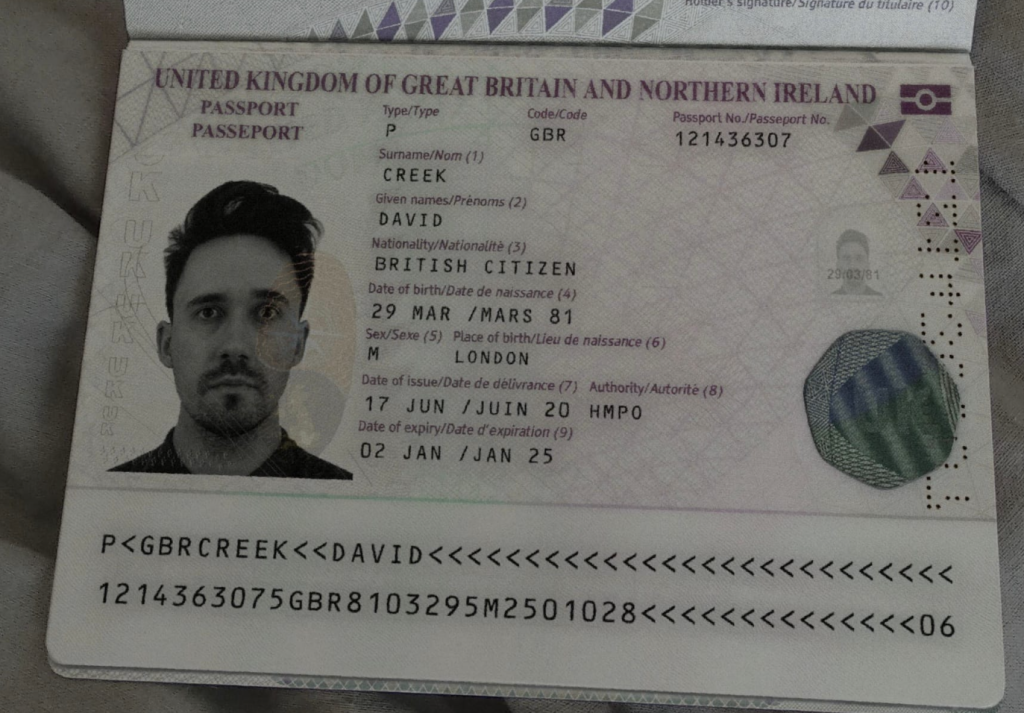

“The era of rendering documents using Photoshop is coming to an end,” announces OnlyFake’s Telegram account. The underground site offers (or rather offered, since it has gone dark for the time being) realistic images of fake IDs, such as passports and driving licenses, from 26 countries for just $15.

The documents are generated in seconds using whatever credentials and facial pictures you choose (or the site generates them for you), and are placed in surroundings that mimic the real spaces where an average user would photograph a document, such as furniture, bedding or carpets.

In a conversation with 404 Media, which ran this story, OnlyFake’s founder, “John Wick,” claimed that the IDs could bypass KYC checks at Binance, Kraken, Bybit, Huobi, Coinbase, OKX and even the more traditional, mainstream Revolut.

404 Media itself managed to pass the verification process on OKX using the driver’s license pictured above, showing that criminals using AI to hide their identities may be more than a step ahead of fintech platforms, with no easy solution to the problem.

Can’t trust your eyes anymore

Even a few years back, some companies requested that you take a photo of your ID alongside your actual face or, as Facebook occasionally does, record a short video to prove you’re human.

But AI can fake this today as well, as one company in Hong Kong learned recently. It was scammed through a Zoom call in which all participants except the targeted individual were AI-generated personas of the company’s personnel (including a UK-based CFO), who successfully convinced him to wire out $25 million.

AI has gotten so good so rapidly that humans are struggling to catch up.

Many are advocating (and employing) the use of AI to fight AI fraud, including in security and user/customer identification.

But as AI is getting better very quickly, the question arises: how long is it before it can mimic real life perfectly? What then?

It seems that the only real solution may be using biometric data that is unique to a particular person and could be checked against a central database of verified human beings. That, however, would be another security nightmare and a very serious threat should such a database ever get accessed by less-than-savoury characters.

Soon, the choice may very well be between fighting an uneven battle against fake identities or risking upending the lives of real people, should their identities be used for criminal purposes.

It’s that, or going back to in-person visits at physical locations for anything that requires confirming our identity.

As we know, criminals already have no scruples about exploiting real people’s identities, using whatever leaked information they are able to get their hands on.

Following the 404 Media investigation, the OnlyFake site went offline, but it’s clear that doesn’t really change anything. Anybody with as little as a capable PC running an open-source image generation model could train it to produce similar images, with only some fine-tuning required to make them convincingly realistic.

The genie is out of the bottle and there’s no putting him back in it.