Disclaimer: Unless otherwise stated, any opinions expressed below belong solely to the author.

A few days ago, OpenAI announced the release of its latest model, “o1”, dubbed Strawberry, which adds more complex reasoning ability, akin to that of advanced degree graduates in physics, chemistry, or biology.

It is also much better at coding and mathematics, scoring 83 per cent on the qualifying exam for the International Mathematics Olympiad, compared with just 13 per cent managed by its older brother, GPT-4o.

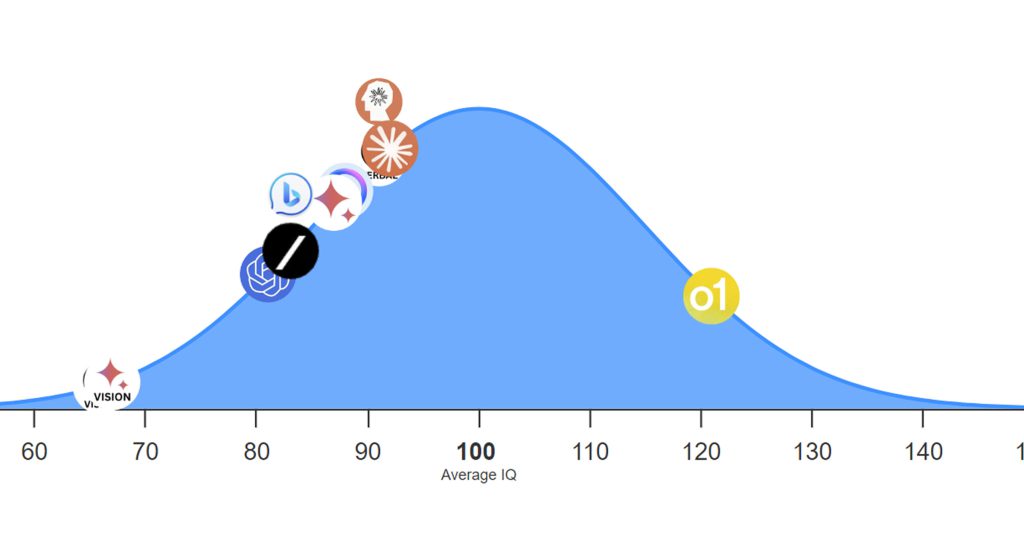

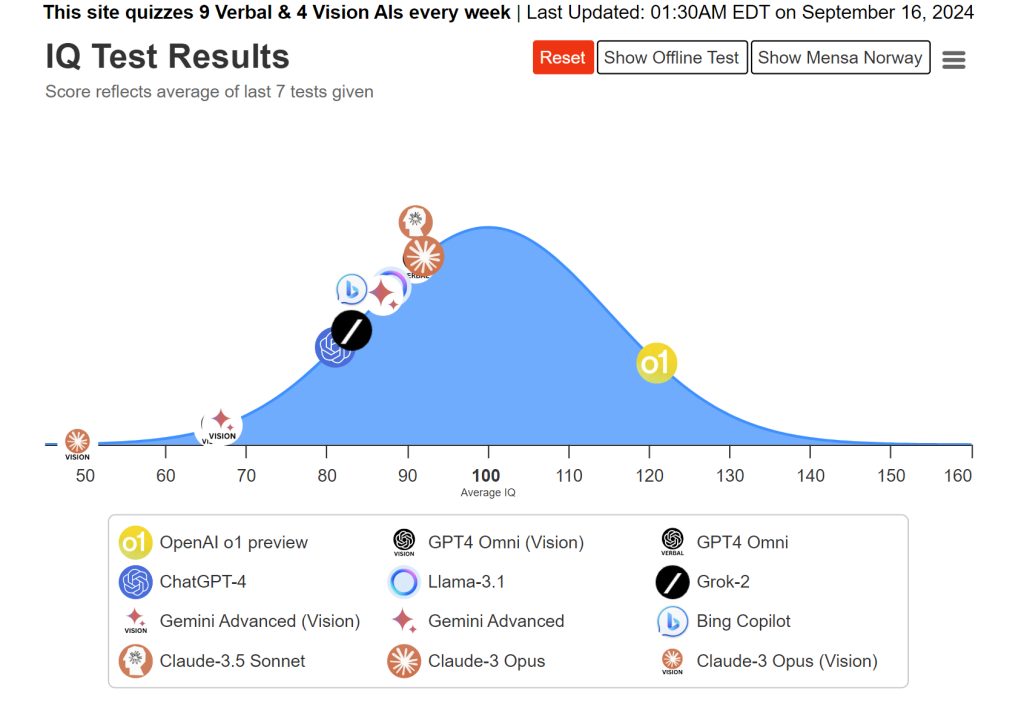

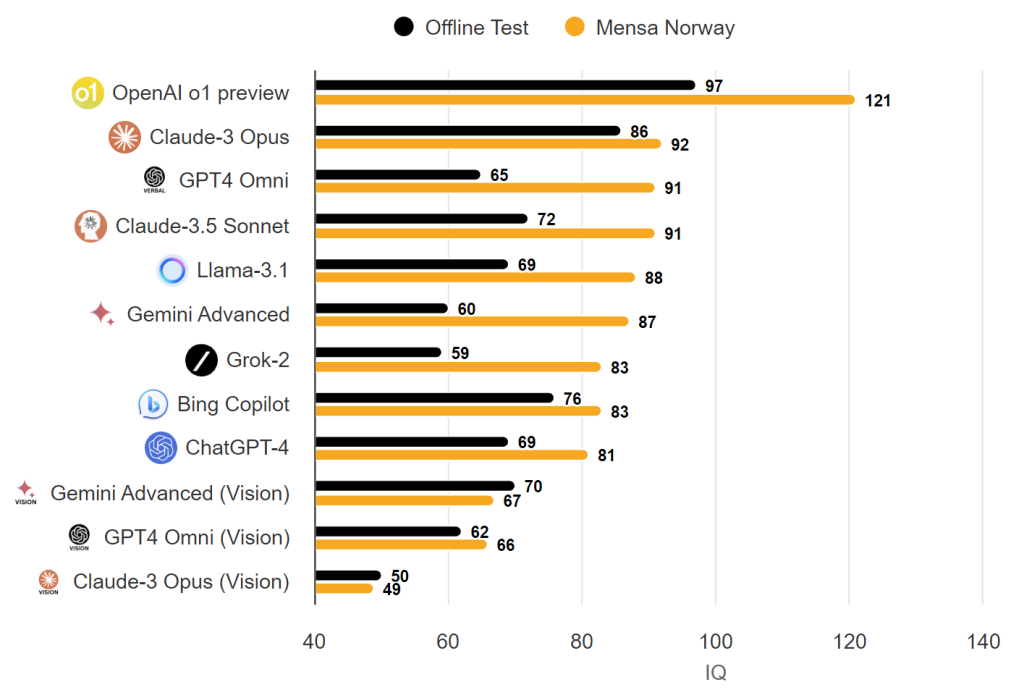

Elsewhere, it has also been given an IQ test sourced from Mensa Norway to probe its reasoning capabilities. Not only did it beat all competitors, outscoring them by 30 points or more, but it also reached an IQ of 120 points:

Not only is it the first model to jump above the average IQ of 100 points, but the result puts it above roughly 91 per cent of humanity. Only about 1 in 10 people has comparable or greater cognitive ability.
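For readers who want to check that percentile, here is a minimal sketch of the arithmetic. It assumes IQ scores follow a normal distribution with a mean of 100 and a standard deviation of 15, which is a common convention but is not stated in the test results themselves; the function name `iq_percentile` is purely illustrative.

```python
from statistics import NormalDist


def iq_percentile(iq, mean=100.0, sd=15.0):
    """Return the share of the population scoring below `iq`.

    Assumes scores are normally distributed; the mean of 100 and
    standard deviation of 15 are assumptions, not figures from the test.
    """
    return NormalDist(mu=mean, sigma=sd).cdf(iq)


if __name__ == "__main__":
    share_below = iq_percentile(120)
    # Under these assumptions, about 91 per cent score below 120
    print(f"Share of people scoring below 120: {share_below:.1%}")
    print(f"Roughly 1 in {1 / (1 - share_below):.0f} people score 120 or higher")
```

A different standard deviation (some tests use other scales) would shift the percentile, so the 91 per cent figure should be read as approximate.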

Cause for celebration or concern?

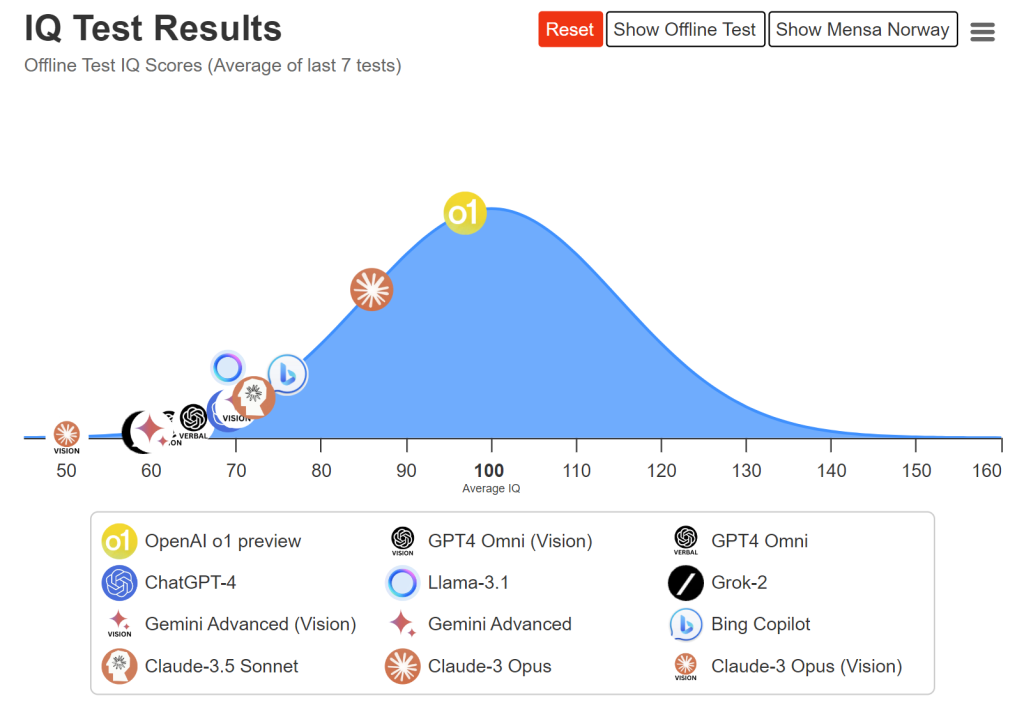

Let’s start by saying that the results of such tests may depend on whether the models had access to the questions ahead of time. That’s why a follow-up test, completely new and offline, was conducted to see how all of them would do with questions they had never seen.

Predictably, the results are less impressive. However, o1 maintains its lead and scores around the human average:

Here’s a comparison of both:

Armed with these two findings, should we celebrate or be worried? And about which: that AI may not be quite at genius level yet, or that it may very well reach it soon?

The truth is that if we want to depend on Artificial Intelligence in the future, it has to possess the cognitive capabilities of the smartest humans, allowing it to recognise patterns and react to them.

This, of course, makes many of us uneasy, as companies like OpenAI are quite deliberately creating beings that are already as capable as most humans and in some cases even more so than the vast majority of us.

The thing about intelligence, however, is that it doesn’t make anybody evil. In fact, it doesn’t even make humans endlessly capable. It’s just a reflection of a set of cognitive abilities, which usually find practical application in the sciences.

After all, some of the smartest people were socially awkward, bookish nerds of physics, math or chemistry, not tyrants. Why then should we fear that similarly capable machines will turn against us or harm us in other ways?

The bigger threat, of course, is that they may begin taking our jobs—starting with some of the better paid ones.

On the other hand, it’s easy to argue that supremely smart humans are already rare, so investing in AI will allow us to use highly capable intelligent machines in many more fields than we can send humans to today.

Fear has followed every technological revolution but, ultimately, it has been proven wrong time and time again. That’s why, I think, there are good reasons to be optimistic about this one as well.

Featured Image Credit: TrackingAI.org