To this day, the guest rooms at Asilomar State Beach and Conference Grounds have neither telephones nor televisions, and Wi-Fi only became available recently. This preserves the rustic allure of the nearly 70-hectare compound, dotted with 30 historic buildings, that rests near the picturesque shores of Pacific Grove on California’s Central Coast.

Yet despite its timeless charm, Asilomar hosted a remarkable convergence of some of the world’s most forward-thinking minds in 2017. More than 100 scholars in law, economics, ethics, and philosophy gathered there and formulated a set of principles for artificial intelligence (AI).

Known as the 23 Asilomar AI Principles, the framework is believed to be one of the earliest and most consequential frameworks for AI governance to date.

The context

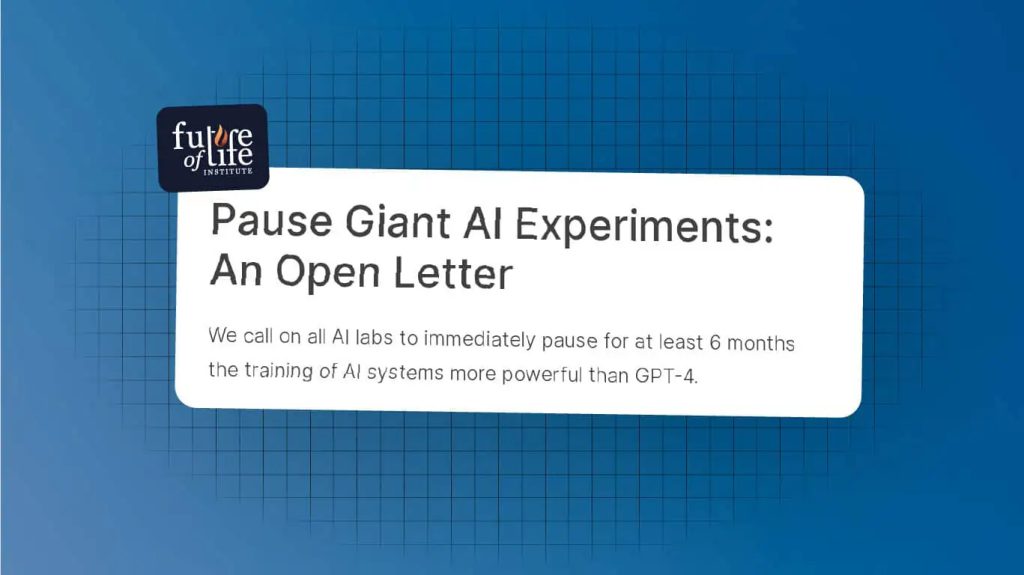

Even if Asilomar does not ring a bell, you have surely heard of the open letter, signed by thousands of AI experts including SpaceX CEO Elon Musk, that called for a six-month pause in the training of AI systems more powerful than GPT-4.

The letter opened with one of the Asilomar principles: “Advanced AI could represent a profound change in the history of life on Earth and should be planned for and managed with commensurate care and resources.”

Many speculated that the letter was prompted by the rise of OpenAI’s generative AI chatbot, ChatGPT, which had taken the digital landscape by storm. Since its release last November, the chatbot has ignited a frenzied arms race among tech giants to unveil similar tools.

Yet beneath this relentless pursuit lie profound ethical and societal concerns about technologies that can effortlessly produce work that eerily mimics that of human beings.

Until this open letter, many countries had taken a laissez-faire approach to the commercial development of AI.

Within a day of the letter’s release, Italy became the first Western country to ban OpenAI’s generative AI chatbot ChatGPT over privacy concerns, although the ban was lifted on April 28 after OpenAI met the regulator’s demands.

Reactions from the world

In the same week, US President Joe Biden met with his council of science and technology advisors to discuss the “risks and opportunities” of AI. He urged technology companies to ensure the utmost safety of their creations before releasing them to the eager public.

A month later, on May 4, the Biden-Harris administration announced a suite of actions designed to promote responsible AI innovation that safeguards the rights and safety of Americans. These measures included draft policy guidance on the development, procurement, and use of AI systems.

On the same day, the UK government said it would undertake a thorough review of AI’s impact on consumers, businesses, and the economy, and of whether new controls are needed.

On May 11, key EU lawmakers agreed on the urgent need for stricter rules on generative AI. They also called for a ban on pervasive facial surveillance, and will vote on the draft of the EU’s AI Act in June.

In China, regulators had already unveiled draft measures in April for managing generative AI services. The Chinese government wants firms to submit comprehensive security assessments before offering their products to the public. At the same time, the authorities are keen to provide a supportive environment that propels leading enterprises to build AI models capable of challenging the likes of ChatGPT.

On the whole, most countries are either seeking input or planning regulations. However, as the boundaries of possibility keep shifting, no expert can confidently predict the precise sequence of developments and consequences that generative AI will bring.

In fact, this lack of predictability and preparedness is exactly what makes AI regulation and governance so challenging.

What about Singapore?

Last year, the Infocomm Media Development Authority (IMDA) and the Personal Data Protection Commission (PDPC) unveiled A.I. Verify, an AI governance testing framework and toolkit that encourages industries to be more transparent about how they deploy AI.

A.I. Verify was released as a Minimum Viable Product (MVP), allowing enterprises to demonstrate the capabilities of their AI systems as well as the robust measures they have taken to mitigate risks.

By extending an open invitation to companies around the globe to join its international pilot, Singapore hopes to strengthen the framework with insights drawn from diverse perspectives, and to contribute actively to the development of international standards.

Unlike many other countries, Singapore treats trust as the bedrock on which AI’s growth must be built. One way to build that trust is to communicate clearly and effectively with all stakeholders, from regulators, enterprises, and auditors to consumers and the public at large, about the many dimensions of AI applications.

Singapore also acknowledges that cultural and geographical differences may shape how universal AI ethics principles are interpreted and implemented, potentially leading to a fragmented AI governance landscape.

For this reason, building trustworthy AI, and having a framework to assess AI’s trustworthiness, are considered the best approach at this stage of development.

Why do we need to regulate AI?

A chorus of voices, including Elon Musk, Bill Gates, and the late Stephen Hawking, has echoed a shared conviction: if we fail to take a proactive approach to the coexistence of machines and humanity, we may inadvertently sow the seeds of our own destruction.

Our society has already been greatly affected by a proliferation of algorithms that have skewed opinions, widened inequality, or triggered a flash crash in currency markets. As AI matures rapidly and regulators struggle to keep pace, we risk being left without relevant rules to govern AI decision-making, which would leave us vulnerable.

Some experts even refused to sign the open letter because they felt it understated the true magnitude of the situation and asked for too little change. Their reasoning is that a sufficiently “intelligent” AI will not stay confined to computer systems for long.

Given OpenAI’s stated aim of creating AI systems that align with human values and intent, they argue, it is only a matter of time before AI becomes “conscious”, possessing a cognitive system powerful enough to make independent decisions just as a human being would.

By then, any regulatory framework built around present-day AI systems would be obsolete.

Of course, these speculative views echo science-fiction tales, and other experts suggest that the field of AI is still in its nascent stages despite its remarkable boom.

They caution that imposing stringent regulations may stifle the very innovation that drives us forward, and that a better understanding of AI’s potential is needed before regulations are considered.

Moreover, AI permeates many domains, each harbouring its own nuances and considerations, so a single, general governance framework may not make sense.

How should we regulate AI?

The conundrum surrounding AI is unique. Unlike traditional engineering systems, whose designers can confidently anticipate functionality and outcomes, AI operates in a realm of uncertainty.

This fundamental distinction calls for a novel approach to regulatory frameworks, one that grapples with the complexities of AI’s failures and its propensity to exceed its intended boundaries. Accordingly, attention has largely centred on controlling how the technology is applied.

At this juncture, however, exerting stricter control over the use of generative AI may seem unrealistic as it becomes ever more ubiquitous in our daily lives. As a result, attention is shifting towards the vital concept of transparency.

Some experts want to devise standards for how AI should be built, tested, and deployed, so that AI systems can be subjected to greater external scrutiny, fostering accountability and trust. Others are contemplating restricting the use of the most powerful versions of AI.

Testifying before Congress on May 16, OpenAI’s CEO Sam Altman proposed a licensing regime to ensure AI models adhere to rigorous safety standards and undergo thorough vetting.

However, this could lead to a situation in which only a handful of companies, equipped with the necessary resources and capabilities, can navigate the complex regulatory landscape and dictate how AI should be operated.

Tech and business author Bernard Marr emphasised the importance of not weaponising AI. He also highlighted the pressing need for an “off-switch”, a fail-safe mechanism that allows humans to intervene when AI goes astray.

Equally critical is the universal adoption by manufacturers of internationally mandated ethical guidelines, which would serve as a moral compass for their creations.

As appealing as these solutions may sound, the questions of who holds the power to implement them, and who is liable when mishaps involving AI or human beings occur, remain unanswered.

Amid these alluring solutions and conflicting perspectives, one thing is clear: AI regulation stands at a critical juncture, waiting for humans to take decisive action, just as we eagerly wait to see how AI will shape us.

Featured Image Credit: IEEE