Back in July, a team of researchers proved that ChatGPT is able to design a simple, producible microchip from scratch in under 100 minutes, following human instructions provided in plain English.

Last month, another group, working at universities in China and the US, decided to take this a step further and cut humans out of the creative process almost completely.

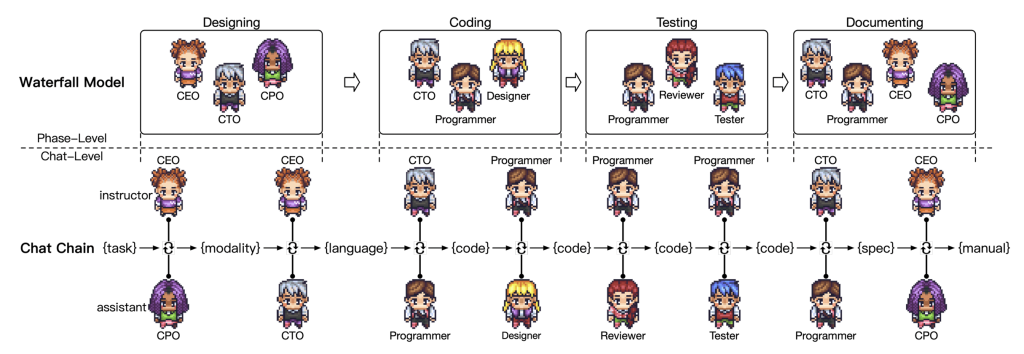

Instead of relying on a single chatbot providing answers to questions asked by a human, they created a team of ChatGPT 3.5-powered bots, each assuming a different role in a software agency: CEO, CTO, CPO, programmer, code reviewer, code tester, and graphics designer.

Each one was briefed on its role and given details about its expected behaviour and the requirements for communicating with the other participants, e.g. “designated task and roles, communication protocols, termination criteria, and constraints.”

Other than that, however, the artificial intelligence (AI) team at ChatDev, as the company was named, had to come up with its own solutions: decide which languages to use, design the interface, test the output, and provide corrections if needed.
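To make the idea of a role briefing more concrete, here is a minimal Python sketch of how one might be represented. The `RoleBriefing` class, its field names, the prompt format, and the placeholder values are all hypothetical and are not taken from the paper. Only the seven roles and the four categories of detail come from the description above.

```python
from dataclasses import dataclass, field


@dataclass
class RoleBriefing:
    """Hypothetical container for what one bot is told before work starts."""
    role: str
    designated_task: str
    communication_protocol: str
    termination_criteria: str
    constraints: list[str] = field(default_factory=list)

    def to_prompt(self) -> str:
        """Render the briefing as plain text (illustrative format only)."""
        lines = [
            f"You are the {self.role}.",
            f"Task: {self.designated_task}",
            f"Communication: {self.communication_protocol}",
            f"Stop when: {self.termination_criteria}",
        ]
        lines += [f"Constraint: {c}" for c in self.constraints]
        return "\n".join(lines)


# The seven roles named in the article.
ROLES = [
    "CEO", "CTO", "CPO", "programmer",
    "code reviewer", "code tester", "graphics designer",
]

# Placeholder values, invented for illustration only.
briefings = {
    role: RoleBriefing(
        role=role,
        designated_task="(task description goes here)",
        communication_protocol="(who this role talks to, and in what format)",
        termination_criteria="(when the conversation should end)",
    )
    for role in ROLES
}

print(briefings["programmer"].to_prompt())
```

Keeping each briefing as structured data rather than free text would make it easy to vary one setting at a time, which matters given how sensitive the results reportedly were to configuration.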

Once the team was set up, the researchers fed it specific software development tasks and measured how it performed on both accuracy and the time required to complete each of them.

The dream CEO

The bots were to follow an established waterfall development model, with work broken up into designing, coding, testing, and documenting, and with each bot assigned its role at the relevant stages of the process.
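The waterfall idea, where each phase only starts once the previous one has handed over its output, can be sketched in a few lines of Python. This is a simplified illustration and not the researchers' implementation: the `run_waterfall` function, the stub workers, and the sample task are assumptions, and in the real system each phase is a conversation between bots, not a single function call.

```python
from typing import Callable

# The four phases named in the article, in waterfall order.
PHASES = ["designing", "coding", "testing", "documenting"]


def run_waterfall(
    task: str, workers: dict[str, Callable[[str], str]]
) -> list[tuple[str, str]]:
    """Pass the output of each phase to the next one, strictly in order."""
    history = []
    artifact = task
    for phase in PHASES:
        artifact = workers[phase](artifact)
        history.append((phase, artifact))
    return history


def make_stub(phase: str) -> Callable[[str], str]:
    """Hypothetical stand-in for a phase: it just wraps the previous output."""
    return lambda previous: f"{phase}({previous})"


workers = {phase: make_stub(phase) for phase in PHASES}

# "build a to-do app" is an invented sample task.
for phase, output in run_waterfall("build a to-do app", workers):
    print(phase, "->", output)
```

The nesting in the printed output shows the defining property of the model: no phase sees anything except what the phase before it produced.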

What I found particularly interesting is the exclusion of the CEO from the technical aspects of the process. Its role is to provide the initial input and return for the summary, while leaving the techies and designers to do their jobs in peace. Quite unlike in the real world!

I think many people would welcome our new overlords, who are instructed not to interfere with the job until it’s really time for them to. Just think how many conflicts could be avoided!


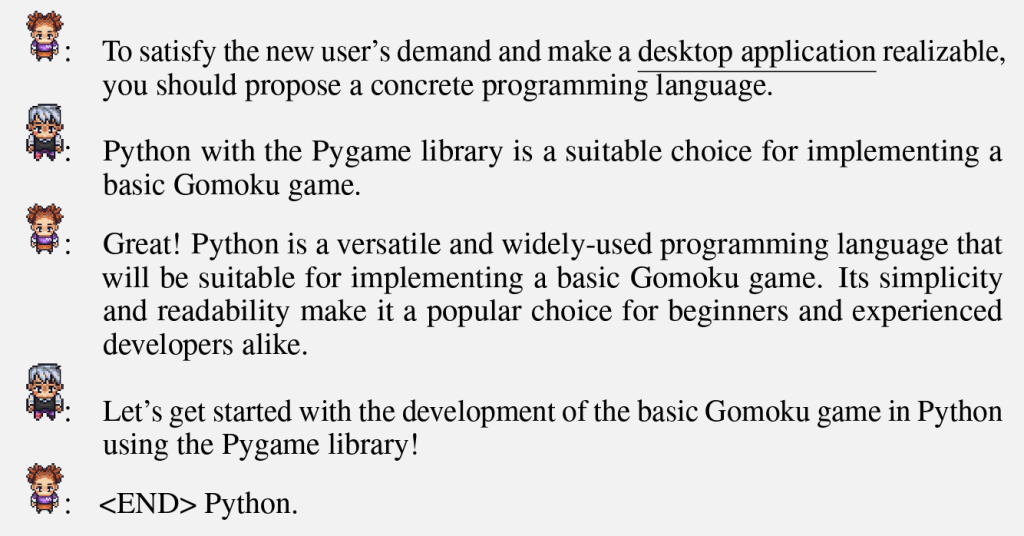

Here’s an example of a fully artificial conversation between all of the “members”:

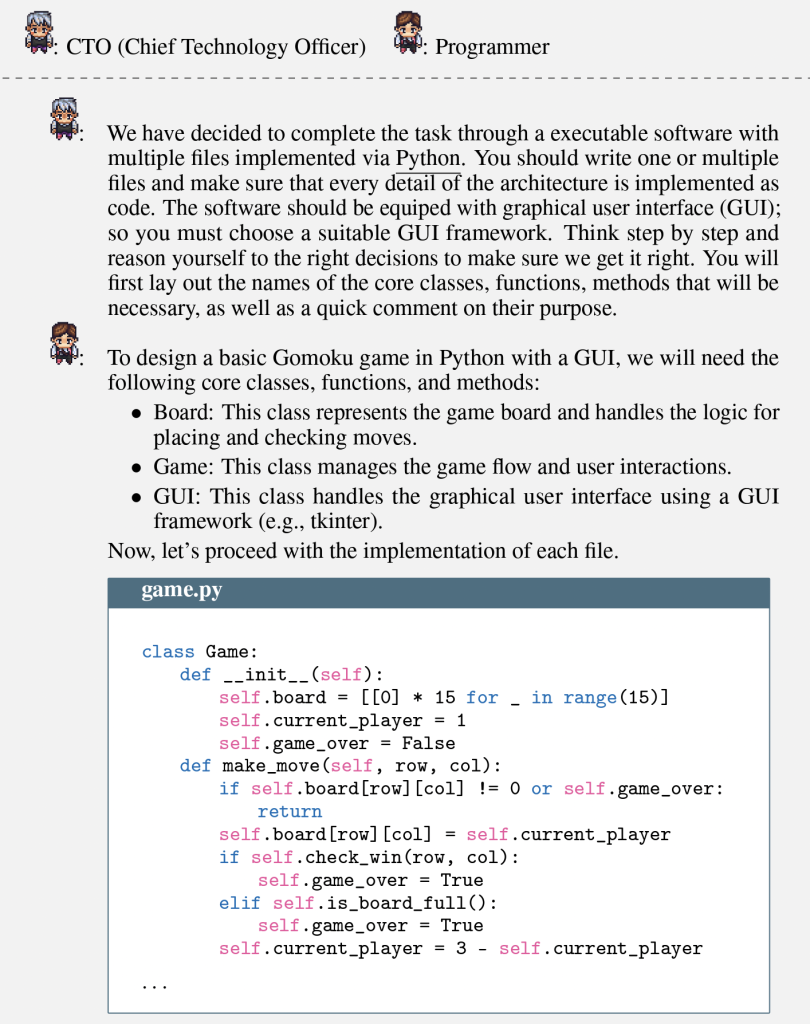

It was later followed by, among others, this exchange between the CTO and the programmer:

These conversations continued at each stage until it was complete, with the results then passed on for interface design, testing, and documentation (such as creating a user manual).

Time is money

When 70 different tasks were run through this virtual AI software dev company, over 86 per cent of the code it produced executed flawlessly. The remainder, just under 14 per cent, ran into hiccups due to broken external dependencies and limitations of ChatGPT’s API, so the failures were not a flaw of the methodology itself.

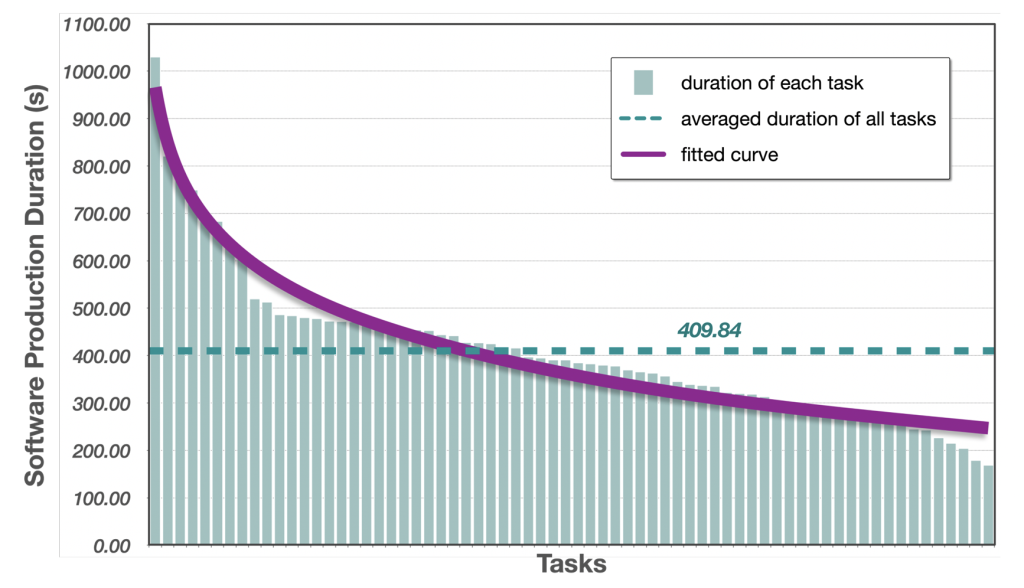

The longest time it took to complete a single task was 1,030 seconds, a little over 17 minutes, with an average of just six minutes and 49 seconds across all tasks.

This, perhaps, is not all that telling yet. After all, there are many tasks, big and small, in software development, so the researchers put their findings in context:

“On average, the development of small-sized software and interfaces using CHATDEV took 409.84 seconds, less than seven minutes. In comparison, traditional custom software development cycles, even within agile software development methods, typically require two to four weeks, or even several months per cycle.”

At the very least, then, this approach could shave weeks off typical development time. And we are only at the very beginning of the revolution, with AI bots that are still not very sophisticated (this wasn’t even the latest version of ChatGPT).
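The timing figures quoted above are easy to check, and the gap is easier to grasp as a ratio. The short Python sketch below converts the reported seconds into minutes and compares the average with a two-week cycle. Counting two weeks as 14 full calendar days is my assumption, and the comparison sets calendar time for a human team against wall-clock time for small tasks, so treat the ratio as a rough order of magnitude.

```python
def to_minutes_seconds(seconds: float) -> tuple[int, float]:
    """Split a duration in seconds into whole minutes and leftover seconds."""
    minutes, remainder = divmod(seconds, 60)
    return int(minutes), round(remainder, 2)


AVERAGE_SECONDS = 409.84   # average per task, as quoted from the paper
LONGEST_SECONDS = 1030     # longest single task, as reported in the article

# Assumption: a two-week cycle counted as 14 full calendar days.
TWO_WEEKS_SECONDS = 14 * 24 * 60 * 60

print(to_minutes_seconds(AVERAGE_SECONDS))   # (6, 49.84)
print(to_minutes_seconds(LONGEST_SECONDS))   # (17, 10)

# How many average ChatDev runs fit into one two-week cycle.
ratio = TWO_WEEKS_SECONDS / AVERAGE_SECONDS
print(f"roughly {ratio:,.0f} runs per two-week cycle")
```

Under these assumptions, the shortest traditional cycle mentioned in the quote is a little under 3,000 times longer than the average automated run.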

And if time wasn’t enough of a saving, the basic cost of running each cycle with AI is just… $1. A dollar.

Even if we factor in the necessary setup and input information provided by humans, this approach still provides an opportunity for massive savings.

Goodbye programmers?

Perhaps soon, but not yet. The authors of the paper themselves admit that although the output produced by the bots was most often functional, it wasn’t always exactly what was expected (though that happens to humans too: just think of all the times you did exactly what the client asked and they were still furious).

They also recognised that the AI itself may exhibit certain biases, and that the settings it was deployed with could dramatically change the output, in extreme cases rendering it unusable. In other words, setting the bots up correctly is a prerequisite to success. At least today.

So, for the time being, I think we’re going to see a rapid rise in human-AI cooperation rather than outright replacement.

However, it’s also difficult to escape the impression that, through it, we will be raising our successors and that, in the not-so-distant future, humans will be limited to setting goals for AI to accomplish, while mastering programming languages will be akin to learning Latin.

You can download the full paper on arXiv.

Featured Image Credit: iLexx/depositphotos